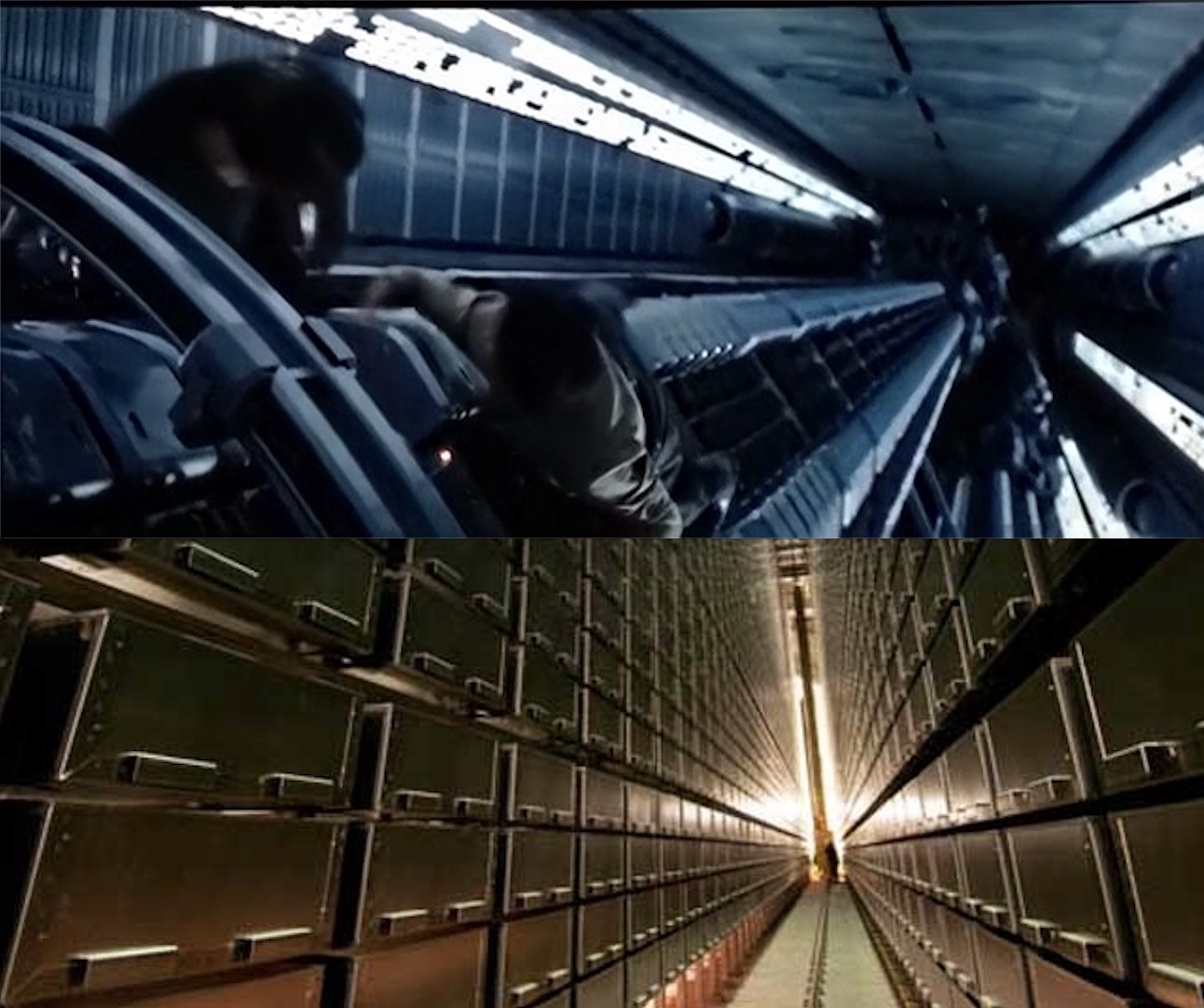

Top: The Scarif Archive in Rogue One / Bottom: Robotic storage facility in the Mansueto Library at the University of Chicago

Ever since Jyn Erso and Cassian Andor extracted the Death Star plans from a digital repository on the planet Scarif in Rogue One, libraries, archives, and museums have played an important role in tentpole science fiction films. From Luke Skywalker’s library of Jedi wisdom books in The Last Jedi, to Blade Runner 2049’s multiple storage media for DNA sequences, to a fateful scene in an ethnographic museum in Black Panther, the imposing and evocative halls of cultural heritage organizations have been in the foreground of the imagined future.

There have been scattered instances of cultural memory institutions in such films in the past—my colleagues in the library will recall, with some eye-rolling, the librarian Jocasta Nu in Star Wars, Episode II: Attack of the Clones—but the appearance of these institutions in recent speculative fiction on the screen seem especially relevant and rich, and central to their plots.

Which begs the question: Why are today’s science fiction films obsessed with libraries, archives, and museums?

The answer of course is rooted in how science fiction has always pursued a heightened understanding of our very real present. At the same time that these movies portray an imagined future, they are also exploring our current anxiety about the past and how it is stored; how we simultaneously wish to leave the past behind, and how it may also be impossible to shake it. They indicate that we live in an age that has an extremely strained relationship with history itself. These films are processing that anxiety on Hollywood’s big screen at a time when our small screens, social media, and browser histories document and preserve so much of we do and say.

Luke Skywalker’s collection of rare books in The Last Jedi neatly captures the tension inherent in these movies. In an egg-shaped stone hut reminiscent of (and indeed filmed in) the rural parts of western Ireland where Christian monasteries were established in the Middle Ages, Luke’s archive of Jedi books represent a profound bond to the traditional wisdom of the Jedi cult. Yet as the movie proceeds, it is clear that these volumes are also a strong link in the chain that holds Luke back. Ultimately his little library is not a source of knowledge, but one of angst. It makes him surly and disassociated from present possibilities, and he must ultimately sever himself from the past that is encapsulated in paper. Burning the books becomes a necessary precursor to his taking action, and to moving to the metaphysical (and more real) plane of the Jedi.

Black Panther uses two characters, rather than one, to embody the tense dynamic between setting history aside and being unable to let it go: the dueling figures of T’Challa (Black Panther) and N’Jadaka (Erik Killmonger). T’Challa understands that black people have been abused and enslaved, globally, for centuries. And yet he imagines a day when Wakanda steps beyond this past, and integrates their society and advanced technology with the outside world that has done so much wrong to them. He is a forward-looking optimist.

N’Jadaka, on the other hand, seethes with anger about the past, and how it is so vividly documented in the halls of cultural heritage institutions. Before he declines into a more monochromatic villain, he experiences frankly justifiable rage at what whites have done with black culture—namely, stolen and stored it like an alien, and lesser, culture, in glass-cased museums. A pivotal scene in one such museum reflects the troubled genesis of institutions such as the Pitt Rivers Museum, which collected artifacts of non-white culture from the British Empire to be viewed and dissected by professors in Oxford.

In one of the most memorable lines of Public Enemy’s It Takes a Nation of Millions to Hold Us Back, the seminal rap album that documents what happened to African slaves and their descendents in the United States, Flava Flav shouts “I got a right to be hostile!” given this terrible history. A poster of that album is on the wall of N’Jadaka’s father’s apartment in Oakland, and it frames, like the glass case in the museum, the young man’s views of the world in which his ancestors have been constantly subjugated.

Blade Runner 2049 is even more unrelentingly pessimistic about the future and its connection to the past. In the movie’s opening, we are told that the documentary evidence of that past has been wiped out in a catastrophic electronic pulse that destroyed digital photographs and electronic records. As we learn, however, not all archives are lost. While personal images and documents that were never printed are gone forever, some plutocratic corporations maintain archival records, and we see several of them in the film: digital media as well as formats encased in glass spheres and more recognizable microfilm. Nevertheless, these archives are imperfect, like so much in the film. Even a leather-bound handwritten book of records in a wasteland orphanage has critical pages ripped out.

Because it is based on the work of Philip K. Dick, who was obsessed with libraries as part of a larger obsession with memory and reality, Blade Runner 2049 ultimately binds not only the past and present together, but the archival and the alive. Humans and replicants, the movie seems to argue, are simply incarnations of archival records, fleshy beings made up of the synthetic or parental DNA that form their core information architecture and the libraries of memories that are either fabricated or lived. This uneasy fusion is at the dark core of the film and its philosophical examination of the permeable boundary between the real and the artificial.

For all of these films, the past constantly threatens to come back to haunt the present. (Just ask those on the Death Star.) In turn, these big-screen portrayals of imagined libraries, archives, and museums should make us reconsider how what we preserve and make accessible reflects—and perhaps determines—who we really are.

Last summer, a few blocks from my house, a new pub opened. Normally this would not be worth noting, except for the fact that this bar is staffed completely by pirates, with eye patches, swords, and even the occasional bird on the shoulder. These are not real pirates, of course, but modern men and women dressed up as pirates. But they wear the pirate garb with no hint of irony or thespian affect whatsoever; these are dedicated, earnest pirates.

Last summer, a few blocks from my house, a new pub opened. Normally this would not be worth noting, except for the fact that this bar is staffed completely by pirates, with eye patches, swords, and even the occasional bird on the shoulder. These are not real pirates, of course, but modern men and women dressed up as pirates. But they wear the pirate garb with no hint of irony or thespian affect whatsoever; these are dedicated, earnest pirates.